Self-study AZ-104 Microsoft Azure Administrator

Microsoft Learn course

Learning-paths in AZ-104 Azure Administrator series:

- https://learn.microsoft.com/en-us/training/paths/az-104-administrator-prerequisites/ AZ-104: Prerequisites for Azure administrators

- https://learn.microsoft.com/en-us/training/paths/az-104-manage-identities-governance/ AZ-104: Manage identities and governance in Azure

- https://learn.microsoft.com/en-us/training/paths/az-104-manage-storage/ AZ-104: Implement and manage storage in Azure

- https://learn.microsoft.com/en-us/training/paths/az-104-manage-compute-resources/ AZ-104: Deploy and manage Azure compute resources

- https://learn.microsoft.com/en-us/training/paths/az-104-manage-virtual-networks/ AZ-104: Configure and manage virtual networks for Azure administrators

- https://learn.microsoft.com/en-us/training/paths/az-104-monitor-backup-resources/

AZ-104: Implement and manage storage in Azure

General notes

Azure Storage supports three categories of data:

| type of data | description | storage example |
|---|---|---|
| structured-data | stored in a relational format that has a shared schema. Tables are an autoscaling NoSQL store. | Azure Table Storage, Azure Cosmos DB, and Azure SQL Database |
| unstructured-data | nonrelational | Azure Blob Storage and Azure Data Lake Storage |
| virtual-machine-data | disks and files. Disks are persistent block storage for Azure IaaS virtual machines. Files are fully managed file shares in the cloud. | Azure managed disks |

Azure storage services:

| storage service | description |

|---|---|

| Azure Blob storage | object storage - unstructured/nonrelational data - HTTP/S, REST, PowerShell, Azure CLI, client libraries |

| Azure Files | network file shares - SMB, NFS, REST, storage client libraries - storage account credentials |

| Azure Queue Storage | used with Azure Functions - store and retrieve messages - messages up to 64 KB in size |

| Azure Table Storage | nonrelational data (NoSQL data) - new Azure Cosmos DB Table API - schemaless |

Considerations choosing Azure storage services:

- Consider storage optimization for massive data. Azure Blob Storage is optimized for storing massive amounts of unstructured data. Objects in Blob Storage can be accessed from anywhere in the world via HTTP or HTTPS. Blob Storage is ideal for serving data directly to a browser, streaming data, and storing data for backup and restore.

- Consider storage with high availability. Azure Files supports highly available network file shares. On-premises apps use file shares for easy migration. By using Azure Files, all users can access shared data and tools. Storage account credentials provide file share authentication to ensure all users who have the file share mounted have the correct read/write access.

- Consider storage for messages. Use Azure Queue Storage to store large numbers of messages. Queue Storage is commonly used to create a backlog of work to process asynchronously.

- Consider storage for structured data. Azure Table Storage is ideal for storing structured, nonrelational data. It provides throughput-optimized tables, global distribution, and automatic secondary indexes. Because Azure Table Storage is part of Azure Cosmos DB, you have access to a fully managed NoSQL database service for modern app development.

Types of storage accounts:

- Standard general purpose

- Premium block blobs - blob storage - recommended for apps with high transaction rates

- Premium file shares - Azure File Shares - if you require both SMB and NFS file shares

- Premium page blobs - Page blobs only - ideal for storing index-based and sparse data structures (operating systems, data disks for VMs, databases)

Azure Storage replication copies your data to protect from planned and unplanned events.

Redundant storage

Definition of a region: Many Azure regions provide availability zones, which are separated groups of datacenters within a region.

- Locally redundant storage (LRS): LRS can be appropriate in several scenarios. Your application stores data that can be easily reconstructed if data loss occurs. Your data is constantly changing, like in a live feed, and storing the data isn’t essential. Your application is restricted to replicating data only within a location due to data governance requirements.

- Zone-redundant storage (ZRS): ZRS synchronously replicates your data across three storage clusters in a single region. Each storage cluster is physically separated from the others and resides in its own availability zone.

- Geo-redundant storage (GRS): GRS replicates your data to another data center in a secondary region. The data is available to be read only if Microsoft initiates a failover from the primary to the secondary region.

- Read-access geo-redundant storage (RA-GRS): RA-GRS is based on GRS. It replicates your data to another data center in a secondary region, and also provides you with the option to read from the secondary region, regardless of whether Microsoft initiates a failover from the primary to the secondary.

- Geo-zone-redundant storage (GZRS): Data in a GZRS storage account is replicated across three Azure availability zones in the primary region, and also replicated to a secondary geographic region for protection from regional disasters.
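The choice between these options can be read as a small decision tree. The sketch below is only a study aid under that assumption: the function name and its three flags are made up for illustration, and it ignores the read flag for GZRS because the notes only describe read access for GRS.

```python
def pick_redundancy(zone_resilient: bool, region_resilient: bool,
                    read_secondary: bool = False) -> str:
    """Map durability requirements to one of the redundancy options in these notes.

    Hypothetical helper: the flags are illustrative, not Azure settings.
    """
    if region_resilient and zone_resilient:
        # Three zones in the primary region plus a copy in a secondary region.
        return "GZRS"
    if region_resilient:
        # A secondary region copy; RA-GRS additionally allows reads from it.
        return "RA-GRS" if read_secondary else "GRS"
    if zone_resilient:
        # Three availability zones inside a single region.
        return "ZRS"
    # Data stays within one location, for example for data governance reasons.
    return "LRS"
```

For example, `pick_redundancy(False, True, read_secondary=True)` selects RA-GRS, while a workload that must stay within one location falls through to LRS.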

| Service | Default endpoint |

|---|---|

| Container service | //mystorageaccount.blob.core.windows.net |

| Table service | //mystorageaccount.table.core.windows.net |

| Queue service | //mystorageaccount.queue.core.windows.net |

| File service | //mystorageaccount.file.core.windows.net |

The following example shows how a subdomain is mapped to an Azure storage account to create a CNAME record in the domain name system (DNS):

Subdomain: blobs.contoso.com

Azure storage account: <storage account>.blob.core.windows.net

Direct CNAME record: contosoblobs.blob.core.windows.net

The service endpoints for a storage account provide the base URL for any blob, queue, table, or file object in Azure Storage.
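As a rough illustration of how the base URL is used, the sketch below builds the default endpoint for each service and then the URL of a single blob. The helper names are hypothetical, the `https://` scheme is assumed, and the account, container, and blob names are examples only.

```python
from urllib.parse import quote

# The four services from the endpoint table above.
SERVICES = ("blob", "table", "queue", "file")


def default_endpoint(account: str, service: str) -> str:
    """Return the default service endpoint for a storage account."""
    if service not in SERVICES:
        raise ValueError(f"unknown service: {service!r}")
    return f"https://{account}.{service}.core.windows.net"


def blob_url(account: str, container: str, blob_name: str) -> str:
    """Append the container and blob name to the blob endpoint.

    quote() percent-encodes characters such as spaces but keeps "/" as is.
    """
    return f"{default_endpoint(account, 'blob')}/{quote(container)}/{quote(blob_name)}"
```

Calling `default_endpoint("mystorageaccount", "file")` yields the File service endpoint from the table, with the scheme added.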

Configure Azure Blob Storage

blob storage

Characteristics of blob-storage:

- Blob Storage can store any type of text or binary data. Some examples are text documents, images, video files, and application installers.

Blob Storage uses three resources to store and manage your data:

- An Azure storage account

- Containers in an Azure storage account

- Blobs in a container

To implement Blob Storage, you configure several settings:

- Blob container options.

- Blob types and upload options.

- Blob Storage access tiers.

- Blob lifecycle rules.

- Blob object replication options.

Configuration characteristics containers & blobs:

- All blobs must be in a container.

- Containers organize your blob storage.

- A container can store an unlimited number of blobs.

- An Azure storage account can contain an unlimited number of containers.

- You must create a storage container before you can begin to upload data.

- Container names must be unique within an Azure storage account

Public access level: The access level specifies whether the container and its blobs can be accessed publicly. By default, container data is private and visible only to the account owner. There are three access level choices:

- Private: (Default) Prohibit anonymous access to the container and blobs.

- Blob: Allow anonymous public read access for the blobs only.

- Container: Allow anonymous public read and list access to the entire container, including the blobs.

https://learn.microsoft.com/en-us/training/modules/configure-blob-storage/4-create-blob-access-tiers

blob access tiers

- hot-tier: frequent reads/writes

- #cool-tier: storing large amounts of infrequently accessed data that can remain in the tier for at least 30 days

- #cold-tier: intended for large amounts of infrequently accessed data that can remain in the tier for at least 90 days

- #archive-tier: an offline tier that’s optimized for data that can tolerate several hours of retrieval latency

blob lifecycle management

Azure Blob Storage supports lifecycle-management for data sets. It offers a rich rule-based policy for GPv2 and Blob Storage accounts. You can use lifecycle policy rules to transition your data to the appropriate access tiers, and set expiration times for the end of a data set’s lifecycle. Example life cycle management actions:

- Transition blobs to a cooler storage tier (Hot to Cool, Hot to Archive, Cool to Archive) to optimize for performance and cost.

- Delete current versions of a blob, previous versions of a blob, or blob snapshots at the end of their lifecycles.

- Apply rules to an entire storage account, to select containers, or to a subset of blobs using name prefixes or blob index tags as filters.

To configure these actions, you use policy-rules:

- Set up programmatic (if/then) rules in the Azure portal

- if: blobs were modified/created more than X days ago

- then: move to <selected blob access tier> storage, or delete the blob
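The if/then rule above can also be written down as a JSON policy document. The sketch below builds one in Python; to the best of my knowledge the key names follow the lifecycle management policy schema, but the rule name, the `logs/` prefix, and the day counts are invented for illustration, and the helper itself is hypothetical.

```python
import json


def lifecycle_rule(name: str, prefix: str, cool_after_days: int,
                   archive_after_days: int, delete_after_days: int) -> dict:
    """Build one lifecycle rule: tier to Cool, then Archive, then delete."""
    if not 0 <= cool_after_days < archive_after_days < delete_after_days:
        raise ValueError("expected cool < archive < delete (in days)")
    return {
        "enabled": True,
        "name": name,
        "type": "Lifecycle",
        "definition": {
            # Filter: only block blobs whose name starts with the prefix.
            "filters": {"blobTypes": ["blockBlob"], "prefixMatch": [prefix]},
            # Actions: the "then" part, keyed on days since last modification.
            "actions": {
                "baseBlob": {
                    "tierToCool": {"daysAfterModificationGreaterThan": cool_after_days},
                    "tierToArchive": {"daysAfterModificationGreaterThan": archive_after_days},
                    "delete": {"daysAfterModificationGreaterThan": delete_after_days},
                }
            },
        },
    }


# Example values only: age out blobs under the "logs/" prefix.
policy = {"rules": [lifecycle_rule("age-out-logs", "logs/", 30, 90, 365)]}
policy_json = json.dumps(policy, indent=2)
```

The resulting `policy_json` string is the kind of document you would paste into the code view of the lifecycle management blade, or pass to tooling that accepts a policy file.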

blob object replication

Object replication copies blobs in a container asynchronously according to policy rules that you configure.

Replication includes the blob content, metadata properties, and versions.

Things to know about blob object replication

There are several considerations to keep in mind when planning your configuration for blob object replication.

- Object replication requires that blob versioning is enabled on both the source and destination accounts. When blob versioning is enabled, you can access earlier versions of a blob. This access lets you recover your modified or deleted data.

- Object replication doesn’t support blob snapshots. Any snapshots on a blob in the source account aren’t replicated to the destination account.

- Object replication is supported when the source and destination accounts are in the Hot, Cool, or Cold tier. The source and destination accounts can be in different tiers.

- When you configure object replication, you create a replication policy that specifies the source Azure storage account and the destination storage account.

- A replication policy includes one or more rules that specify a source container and a destination container. The policy identifies the blobs in the source container to replicate.

Things to consider when configuring blob object replication

There are many benefits to using blob object replication. Consider the following scenarios and think about how replication can be a part of your Blob Storage strategy.

- Consider latency reductions. Minimize latency with blob object replication. You can reduce latency for read requests by enabling clients to consume data from a region that’s in closer physical proximity.

- Consider efficiency for compute workloads. Improve efficiency for compute workloads by using blob object replication. With object replication, compute workloads can process the same sets of blobs in different regions.

- Consider data distribution. Optimize your configuration for data distribution. You can process or analyze data in a single location and then replicate only the results to other regions.

- Consider cost benefits. Manage your configuration and optimize your storage policies. After your data is replicated, you can reduce costs by moving the data to the Archive tier by using lifecycle management policies.

Consider versioning when using object replication

You can enable Blob versioning to automatically maintain previous versions of an object. When blob versioning is enabled, you can access earlier versions of a blob. This access lets you recover your modified or deleted data.

Manage blobs

3 types of blob:

- block blob (most blob storage scenarios use block blobs - ideal for text & binary data - default type for a new blob)

- append blob (useful for logging)

- page blob (frequent read/write - Azure VMs use page blobs for OS disks/data disks)

Tools available for file/blob management:

- Azure Storage Explorer (GUI)

- AzCopy (CLI)

- Azure Data Box Disk (ship physical SSDs to Microsoft)

Determine blob pricing

Cost of block Blob Storage depends on:

- Volume of data stored per month.

- Quantity and types of operations performed, along with any data transfer costs.

- Data redundancy option selected.

Considerations for Azure storage account and Blob Storage:

- Performance tiers. The Blob Storage tier determines the amount of data stored and the cost for storing that data. As the performance tier gets cooler, the per-gigabyte cost decreases.

- Data access costs. Data access charges increase as the tier gets cooler. For data in the Cool and Archive tiers, you’re billed a per-gigabyte data access charge for reads.

- Transaction costs. There’s a per-transaction charge for all tiers. The charge increases as the tier gets cooler.

- Geo-replication data transfer costs. This charge only applies to accounts that have geo-replication configured, including GRS and RA-GRS. Geo-replication data transfer incurs a per-gigabyte charge.

- Outbound data transfer costs. Outbound data transfers incur billing for bandwidth usage on a per-gigabyte basis. This billing is consistent with general-purpose Azure storage accounts.

- Changes to the storage tier. If you change the account storage tier from Cool to Hot, you incur a charge equal to reading all the data existing in the storage account. Changing the account storage tier from Hot to Cool incurs a charge equal to writing all the data into the Cool tier (GPv2 accounts only).

Summary

Main takeaways:

- Azure Blob Storage is a powerful solution for storing unstructured data in the cloud, such as text documents, images, and videos.

- Blob Storage offers different access tiers (Hot, Cool, Cold, and Archive) to optimize performance and cost based on the usage patterns of your data.

- You can configure lifecycle management policies to automatically transition data between access tiers and set expiration times for data.

- Object replication allows you to asynchronously copy blobs between containers in different regions, providing redundancy and reducing latency for read requests.

Configure Azure Storage security

Review Azure Storage security strategies

- Encryption at rest. Storage Service Encryption (SSE) with a 256-bit Advanced Encryption Standard (AES) cipher encrypts all data written to Azure Storage. When you read data from Azure Storage, Azure Storage decrypts the data before returning it. This process incurs no extra charges and doesn’t degrade performance. Encryption at rest includes encrypting virtual hard disks (VHDs) with Azure Disk Encryption. This encryption uses BitLocker for Windows images, and uses dm-crypt for Linux.

- Encryption in transit. Keep your data secure by enabling transport-level security between Azure and the client. Always use HTTPS to secure communication over the public internet. When you call the REST APIs to access objects in storage accounts, you can enforce the use of HTTPS by requiring secure transfer for the storage account. After you enable secure transfer, connections that use HTTP will be refused. This flag will also enforce secure transfer over SMB by requiring SMB 3.0 for all file share mounts.

- Encryption models. Azure supports various encryption models, including server-side encryption that uses service-managed keys, customer-managed keys in Key Vault, or customer-managed keys on customer-controlled hardware. With client-side encryption, you can manage and store keys on-premises or in another secure location.

- Authorize requests. For optimal security, Microsoft recommends using Microsoft Entra ID with managed identities to authorize requests against blob, queue, and table data, whenever possible. Authorization with Microsoft Entra ID and managed identities provides superior security and ease of use over Shared Key authorization.

- RBAC. RBAC ensures that resources in your storage account are accessible only when you want them to be, and to only those users or applications whom you grant access. Assign RBAC roles scoped to an Azure storage account.

- Storage analytics. Azure Storage Analytics performs logging for a storage account. You can use this data to trace requests, analyze usage trends, and diagnose issues with your storage account.

definition shared-key: Shared Key authorization relies on your Azure storage account access keys and other parameters to produce an encrypted signature string. The string is passed on the request in the Authorization header.

definition shared-access-signature: A SAS delegates access to a particular resource in your Azure storage account with specified permissions and for a specified time interval.

definition anonymous-read-access: Read requests to public containers and blobs don’t require authorization.

Create shared access signatures

You can provide a SAS to clients who shouldn’t have access to your storage account key. By distributing a SAS URI to these clients, you grant them access to a resource for a specified period of time. You’d typically use a SAS for a service where users read and write their data to your storage account.

definition user-delegation-SAS: secured with Microsoft Entra credentials and also by the permissions specified for the SAS. A user delegation SAS is supported for Blob Storage and Data Lake Storage.

definition account-level-SAS: to allow access to anything that a service-level SAS can allow, plus other resources and abilities. For example, you can use an account-level SAS to allow the ability to create file systems.

definition service-level-SAS: to allow access to specific resources in a storage account. You’d use this type of SAS, for example, to allow an app to retrieve a list of files in a file system, or to download a file.

definition stored-access-policy: can provide another level of control when you use a service-level SAS on the server side. You can group SASs and provide other restrictions by using a stored access policy.

Recommendations shared-access-signature:

- Always use HTTPS for creation and distribution

- Reference stored access policies when possible

- Set near-term expiry times for an unplanned SAS

- Require clients to renew the SAS automatically

- Plan carefully for the SAS start time

Identify URI and SAS parameters

When you create your shared access signature (SAS), a uniform resource identifier (URI) is created by using parameters and tokens. The URI consists of your Azure Storage resource URI and the SAS token.

| Parameter | Example | Description |

|---|---|---|

| Resource URI | https://myaccount.blob.core.windows.net/?restype=service&comp=properties | Defines the Azure Storage endpoint and other parameters. This example defines an endpoint for Blob Storage and indicates that the SAS applies to service-level operations. When the URI is used with GET, the Storage properties are retrieved. When the URI is used with PUT, the Storage properties are configured. |

| Storage version | sv=2015-04-05 | For Azure Storage version 2012-02-12 and later, this parameter indicates the version to use. This example indicates that version 2015-04-05 (April 5, 2015) should be used. |

| Storage service | ss=bf | Specifies the Azure Storage to which the SAS applies. This example indicates that the SAS applies to Blob Storage and Azure Files. |

| Start time | st=2015-04-29T22%3A18%3A26Z | (Optional) Specifies the start time for the SAS in UTC time. This example sets the start time as April 29, 2015 22:18:26 UTC. If you want the SAS to be valid immediately, omit the start time. |

| Expiry time | se=2015-04-30T02%3A23%3A26Z | Specifies the expiration time for the SAS in UTC time. This example sets the expiry time as April 30, 2015 02:23:26 UTC. |

| Resource | sr=b | Specifies which resources are accessible via the SAS. This example specifies that the accessible resource is in Blob Storage. |

| Permissions | sp=rw | Lists the permissions to grant. This example grants access to read and write operations. |

| IP range | sip=168.1.5.60-168.1.5.70 | Specifies a range of IP addresses from which a request is accepted. This example defines the IP address range 168.1.5.60 through 168.1.5.70. |

| Protocol | spr=https | Specifies the protocols from which Azure Storage accepts the SAS. This example indicates that only requests by using HTTPS are accepted. |

| Signature | sig=F%6GRVAZ5Cdj2Pw4tgU7IlSTkWgn7bUkkAg8P6HESXwmf%4B | Specifies that access to the resource is authenticated by using a Hash-Based Message Authentication Code (HMAC) signature. The signature is computed with a key using the SHA256 algorithm, and encoded by using Base64 encoding. |
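To check your understanding of the table, it can help to split a SAS URI back into its parameters. The sketch below does that with the Python standard library; the `describe_sas` helper is hypothetical, the example values come from the table, and the signature is replaced by a placeholder rather than a real value.

```python
from typing import Dict
from urllib.parse import parse_qs, urlsplit

# Query parameter -> name used in the table above.
SAS_PARAMETERS = {
    "sv": "Storage version",
    "ss": "Storage service",
    "st": "Start time",
    "se": "Expiry time",
    "sr": "Resource",
    "sp": "Permissions",
    "sip": "IP range",
    "spr": "Protocol",
    "sig": "Signature",
}


def describe_sas(uri: str) -> Dict[str, str]:
    """Return the SAS token parameters of a URI, keyed by their table name.

    Parameters that belong to the resource URI itself (restype, comp)
    are skipped. parse_qs also decodes percent-encoding such as %3A.
    """
    values = parse_qs(urlsplit(uri).query)
    return {SAS_PARAMETERS[key]: value[0]
            for key, value in values.items() if key in SAS_PARAMETERS}


example_uri = (
    "https://myaccount.blob.core.windows.net/?restype=service&comp=properties"
    "&sv=2015-04-05&ss=bf&st=2015-04-29T22%3A18%3A26Z&se=2015-04-30T02%3A23%3A26Z"
    "&sr=b&sp=rw&sip=168.1.5.60-168.1.5.70&spr=https&sig=PLACEHOLDER"
)
sas_fields = describe_sas(example_uri)
```

Here `sas_fields["Start time"]` comes back with the colons decoded, which makes it easier to compare the start and expiry times by eye.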

Determine Azure Storage encryption

When you create a storage account, Azure generates two 512-bit storage account access keys for that account. These keys can be used to authorize access to data in your storage account via Shared Key authorization, or via SAS tokens that are signed with the shared key.
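The signing step itself is an HMAC-SHA256 over a string-to-sign, keyed with the Base64-decoded account key, and then Base64-encoded. The sketch below shows only that mechanism: the string-to-sign is heavily simplified (the real one has more fields in a fixed order), and the key is a dummy 512-bit value, not a real account key.

```python
import base64
import hashlib
import hmac


def sign(string_to_sign: str, account_key_b64: str) -> str:
    """HMAC-SHA256 the string with the decoded key and Base64-encode the result."""
    key = base64.b64decode(account_key_b64)
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return base64.b64encode(digest).decode("ascii")


# Dummy key: 64 bytes = 512 bits, Base64-encoded like an account access key.
demo_key = base64.b64encode(bytes(range(64))).decode("ascii")

# Simplified, illustrative string-to-sign: permissions, start time, expiry time.
demo_signature = sign("rw\n2015-04-29T22:18:26Z\n2015-04-30T02:23:26Z", demo_key)
```

Because the signature depends on the key, regenerating an access key invalidates every SAS token that was signed with it.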

Options for storage account encryption:

- Infrastructure encryption (entire storage account or encryption scope within the account)

- Platform-managed keys (customers don’t interact with the keys - the keys are used for Azure Data Encryption-At-Rest)

- Customer-managed keys (#CMK) (stored in customer-managed key vault or hardware security module (#HSM))

Apply Azure Storage best practices

Use Storage Insights to/for:

- Detailed metrics & logs (KPIs like latency, throughput, capacity utilization, transactions)

- Enhanced security & compliance (actionable insights, alerts)

- RBAC

- Unified View

Configure Azure Files

Introduction

definition Azure-Files: offers fully managed file shares in the cloud that are accessible via industry standard protocols (SMB, NFS, HTTP).

definition Azure-File-Sync: a service that allows you to cache several Azure file shares on an on-premises Windows Server or cloud virtual machine.

Comparison of Azure Files <-> Azure Blob Storage

| Azure Files (file shares) | Azure Blob Storage (blobs) |

|---|---|

| Azure Files provides SMB, NFS, client libraries & REST | Azure Blob provides client libraries & REST |

| Files are true directory objects - data is accessed through file shares across VMs | Blobs are a flat namespace - Blob data is accessed through a container |

| Ideal for lift-and-shift apps using the native file system APIs | Ideal for apps that need to support streaming and random-access scenarios |

| Store development and debugging tools used across VMs | Access application data from anywhere |

Azure Files tiers:

- Premium (SSDs) - available only in the FileStorage storage account kind - LRS/ZRS redundancy - not available in all Azure regions

- Standard (HDDs) - general-purpose file shares and dev/test environments - LRS/ZRS/GRS/GZRS redundancy - available in all Azure regions

Authentication methods supported by Azure Files:

- Identity-based over SMB (single-sign-on experience similar to accessing on-premises file shares)

- Access key (static - provide full access - best practice not to share and use identity-based authentication)

- Shared access signature (#SAS) token - URI based on the storage access key - provides restricted access rights (allowed permissions, start and expiry times, allowed IP addresses, allowed protocols) - only used for REST API access from code to Azure Files

Important for SMB traffic for Azure Files:

- Open TCP port 445

- Enable secure transfer with the Secure transfer required setting (HTTPS)

Azure Files snapshots:

- provided at the file share level

- only data changed since last snapshot is saved (incremental)

- a share snapshot can be retrieved for an individual file, so you can restore single files instead of the whole share

- to delete a file share, its snapshots must be deleted as well

Soft-delete in Azure Files:

- enabled at the storage account level

- configurable retention period of soft deletes (1-365 days)

- can be enabled for existing and new file shares

Azure-Storage-Explorer is a standalone application that makes it easy to work with Azure Storage data on Windows, macOS, and Linux.

cloud-tiering is an optional feature of Azure File Sync where frequently accessed files are cached locally on the server while all other files are tiered to Azure Files based on policy settings. When a file is tiered, Azure File Sync replaces the file locally with a pointer. A pointer is commonly referred to as a reparse point. The reparse point represents a URL to the file in Azure Files.

Summary and resources

- Azure Files provides the SMB and NFS protocols, client libraries, and a REST interface that allows access from anywhere to stored files.

- Azure Files is ideal to lift and shift an application to the cloud that already uses the native file system APIs. Share data between the app and other applications running in Azure.

- Azure Files offers two industry-standard file system protocols for mounting Azure file shares: the Server Message Block (SMB) protocol and the Network File System (NFS) protocol.

- Azure Files offers two types of file shares: standard and premium. The premium tier stores data on modern solid-state drives (SSDs), while the standard tier uses hard disk drives (HDDs).

- File share snapshots capture a point-in-time, read-only copy of your data.

- Soft delete allows you to recover your deleted file share.

- Azure Storage Explorer is a standalone application that makes it easy to work with stored data on Windows, macOS, and Linux.

- Azure File Sync enables you to cache file shares on an on-premises Windows Server or cloud virtual machine.

test exam notes

If you want to run a Docker container as an Azure web service, you must configure the Publish option and select Docker container.

To enable POSIX-compliant access control lists (ACLs), the hierarchical namespace must be used. The remaining options are valid for a storage account, but do not provide the POSIX-compliant feature.

AZ-104: Deploy and manage Azure compute resources

Azure resources that make up a VM:

- The VM itself

- Disks for storage

- Virtual network

- Network interface to communicate on the network

- Network Security Group (NSG) to secure the network traffic

- An IP address (public, private, or both)

| Option | Description |
|---|---|
| General purpose | General-purpose VMs are designed to have a balanced CPU-to-memory ratio. Ideal for testing and development, small to medium databases, and low to medium traffic web servers. |
| Compute optimized | Compute optimized VMs are designed to have a high CPU-to-memory ratio. Suitable for medium traffic web servers, network appliances, batch processes, and application servers. |
| Memory optimized | Memory optimized VMs are designed to have a high memory-to-CPU ratio. Great for relational database servers, medium to large caches, and in-memory analytics. |
| Storage optimized | Storage optimized VMs are designed to have high disk throughput and IO. Ideal for VMs running databases. |
| GPU | GPU VMs are specialized virtual machines targeted for heavy graphics rendering and video editing. These VMs are ideal options for model training and inferencing with deep learning. |
| High performance compute | High performance compute VMs are the fastest and most powerful CPU virtual machines, with optional high-throughput network interfaces. |

VM pricing:

- no separate costs for NICs but there is a maximum

- no additional charges for NSGs

- no charge for local disk storage, OS disk charged at regular rate for disks, different costs for Standard/Premium SSDs

test exam notes

ARM templates use the copy property to deploy multiple instances of a resource, such as two virtual machines, in a single deployment.
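As a memory aid for the `copy` property, the sketch below builds the relevant fragment of an ARM resource as a Python dict and mimics how `copyIndex()` numbers the instances from zero. It is not a deployable template: the `properties` section is left empty, and the API version, copy loop name, and `vm` prefix are assumptions chosen for illustration.

```python
import json
from typing import List


def vm_copy_resource(count: int, name_prefix: str = "vm") -> dict:
    """Return an ARM resource fragment that deploys `count` VM instances."""
    if count < 1:
        raise ValueError("count must be at least 1")
    return {
        "type": "Microsoft.Compute/virtualMachines",
        "apiVersion": "2023-03-01",  # assumed version, check before use
        # copyIndex() is evaluated per iteration: 0, 1, 2, ...
        "name": f"[concat('{name_prefix}', copyIndex())]",
        "location": "[resourceGroup().location]",
        "copy": {"name": "vmCopy", "count": count},
        "properties": {},  # hardware, OS, and network profiles omitted here
    }


def expanded_names(count: int, name_prefix: str = "vm") -> List[str]:
    """Mimic the names Resource Manager would produce for the copy loop."""
    return [f"{name_prefix}{index}" for index in range(count)]


two_vms = vm_copy_resource(2)
two_vms_json = json.dumps(two_vms, indent=2)
```

With `count` set to 2, the names expand to `vm0` and `vm1`, which matches the "two virtual machines in a single deployment" case from the exam note.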

deployment: virtual-machine-scale-set can be configured from the availability options.

You can detach a disk from a running virtual machine (#hot-removal).

Characteristics of App Service Plans:

- The Standard service plan can host unlimited web apps, up to 50 GB of disk space, and up to 10 instances. The plan will cost approximately $0.10/hour.

- The Free plan only offers 1 GB of disk size and 0 instances to host the app.

- The Premium plan offers 250 GB of disk space and up to 30 instances and will cost approximately $0.20/hour.

- The Basic plan offers 10 GB of disk space and up to three virtual machines.

The Basic app service plan does not support automatic scaling - you must scale up the plan to Premium (or higher) to support automatic scaling. After that you must configure a scaling condition, based on a metric (CPU), which will automatically trigger scaling (out) of the app service web app.

DNS for Azure App Service webapps: For web apps, you create either an A (Address) record or a CNAME (Canonical Name) record. An A record maps a domain name to an IP address. A CNAME record maps a domain name to another domain name. DNS uses the second name to look up the address. Users still see the first domain name in their browser. If the IP address changes, a CNAME entry is still valid, whereas an A record must be updated.
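The difference between the two record types shows up when the app's IP address changes. The toy resolver below is a sketch of that behaviour only, not of real DNS: the zone is a plain dict, the domain names are examples, and the IP addresses come from a documentation-only range.

```python
from typing import Dict, Tuple

Zone = Dict[str, Tuple[str, str]]  # name -> (record type, value)


def build_zone(app_ip: str) -> Zone:
    """Example zone: one CNAME and one A record pointing at the same web app."""
    return {
        # Record managed by the platform; follows the app's current IP address.
        "contoso.azurewebsites.net": ("A", app_ip),
        # Custom domain via CNAME: maps a name to another name.
        "www.contoso.com": ("CNAME", "contoso.azurewebsites.net"),
        # Custom domain via A record: pinned to the IP address at creation time.
        "shop.contoso.com": ("A", "203.0.113.10"),
    }


def resolve(name: str, zone: Zone, max_hops: int = 8) -> str:
    """Follow CNAME records until an A record is reached."""
    for _ in range(max_hops):
        record_type, value = zone[name]
        if record_type == "A":
            return value
        name = value  # CNAME: look up the second name instead
    raise RuntimeError("too many CNAME hops")


before = build_zone("203.0.113.10")
after = build_zone("203.0.113.20")  # the app's IP address has changed
```

Resolving `www.contoso.com` against `after` follows the CNAME to the new address, while `shop.contoso.com` still returns the old one until its A record is updated by hand.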

On Azure portal blades about ARM deployments: Navigating to the Diagnostics settings blade provides the ability to diagnose errors or review warnings. Navigating to the Metrics blade provides metrics information (CPU, resources) to users. On the Deployments blade for the resource group (app-grp1), all the details related to a deployment, such as the name, status, date last modified, and duration, are visible. Navigating to the Policy blade only provides information related to the policies enforced on the resource group.

On Azure Spot: Azure Spot instances allow you to provision virtual machines at a reduced cost, but these virtual machines can be stopped by Azure when Azure needs the capacity for other pay-as-you-go workloads, or when the price of the spot instance exceeds the maximum price that you have set. These virtual machines are good for dev, testing, or for workloads that do not require any specific SLA.

Azure Service Bus: Azure Container Apps allows a set of triggers to create new instances, called replicas. For Azure Service Bus, an event-driven trigger can be used to run the escalation method. The remaining scale triggers cannot use a scale rule based on messages in an Azure service bus.

ad hoc SAS: An ad hoc SAS allows you to directly specify the start time and expiry time within the SAS token itself without requiring a stored access policy. This makes it ideal for granting time-limited access—such as 30 days—to a third-party application.

AZ-104: Configure and manage virtual networks for Azure administrators

NSGs act as software firewalls, applying custom rules to each inbound or outbound request at the network interface and subnet level

virtual-network-service-endpoints: secure Azure service resources exclusively to your virtual networks, enabling private IP addresses to reach Azure services without requiring public IP addresses.

Characteristics of a network-security-group NSG:

- A network security group contains a list of security rules that allow or deny inbound or outbound network traffic.

- A network security group can be associated to a subnet or a network interface.

- A network security group can be associated multiple times.

- You create a network security group and define security rules in the Azure portal.

- Each network interface that exists in a subnet can have zero, or one, associated network security groups.

- Each subnet can have a maximum of one associated network security group.

Security rules for an NSG:

| setting | value |

|---|---|

| source | any,IP,service tag,application security group |

| protocol | TCP,UDP,ICMP |

| etc | etc |

- You can’t remove the default security rules

- You can override a default security rule by creating another rule with a higher Priority

- Inbound rule processing: rules for subnets ⇒ rules for NICs

- Outbound rule processing: rules for NICs ⇒ subnets

- Intra-subnet traffic: an NSG associated with a subnet also applies to traffic between VMs in that same subnet

- Priority: the lower the value, the higher the priority of the rule

Default rules inbound:

| priority | name | port | protocol | source | destination | action |

|---|---|---|---|---|---|---|

| 65000 | AllowVnetInbound | Any | Any | VirtualNetwork | VirtualNetwork | Allow |

| 65001 | AllowAzureLoadBalancerInbound | Any | Any | AzureLoadBalancer | Any | Allow |

| 65500 | DenyAllInbound | Any | Any | Any | Any | Deny |

Default rules outbound:

| priority | name | port | protocol | source | destination | action |

|---|---|---|---|---|---|---|

| 65000 | AllowVnetOutBound | Any | Any | VirtualNetwork | VirtualNetwork | Allow |

| 65001 | AllowInternetOutbound | Any | Any | Any | Internet | Allow |

| 65500 | DenyAllOutBound | Any | Any | Any | Any | Deny |
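The processing order above can be sketched as a small model. This is a simplified illustration, not how Azure implements NSGs: sources are matched as literal tags, ports as single values, and the custom rule `AllowHttpsInbound` is a hypothetical example. It shows that the first matching rule (lowest priority number) wins, and that inbound traffic must be allowed by the subnet NSG and then by the NIC NSG.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    priority: int
    name: str
    port: str    # "Any" or a single port number as text
    source: str  # "Any" or a tag such as "VirtualNetwork"
    action: str  # "Allow" or "Deny"


# Default inbound rules, taken from the table above
DEFAULT_INBOUND = [
    Rule(65000, "AllowVnetInbound", "Any", "VirtualNetwork", "Allow"),
    Rule(65001, "AllowAzureLoadBalancerInbound", "Any", "AzureLoadBalancer", "Allow"),
    Rule(65500, "DenyAllInbound", "Any", "Any", "Deny"),
]


def evaluate(rules, source, port):
    """Return the first matching rule, lowest priority number first."""
    for rule in sorted(rules, key=lambda r: r.priority):
        source_ok = rule.source in ("Any", source)
        port_ok = rule.port in ("Any", str(port))
        if source_ok and port_ok:
            return rule
    raise LookupError("no rule matched")  # unreachable while a DenyAll rule exists


def inbound_allowed(subnet_rules, nic_rules, source, port):
    """Inbound traffic is checked against the subnet NSG first, then the NIC NSG."""
    for rules in (subnet_rules, nic_rules):
        if evaluate(rules, source, port).action == "Deny":
            return False
    return True


# Hypothetical custom rule: allow HTTPS from the internet on the subnet NSG only
subnet_nsg = DEFAULT_INBOUND + [Rule(100, "AllowHttpsInbound", "443", "Internet", "Allow")]
nic_nsg = DEFAULT_INBOUND

print(evaluate(subnet_nsg, "Internet", 443).name)                   # AllowHttpsInbound
print(inbound_allowed(subnet_nsg, nic_nsg, "Internet", 443))        # False
```

The second result is `False` because the NIC-level NSG still falls through to `DenyAllInbound`; the custom rule would have to exist at both levels for the traffic to reach the VM.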

definition: application-security-groups ASG

Application security groups (ASG):

- You can join a VM to an ASG and then use the ASG as source or destination in NSG rules

- By organizing VMs into ASGs, you don’t need to distribute servers across specific subnets

- Helps eliminate multiple rule sets (for example, one per VM) and reduces maintenance

- service-tags can help: they represent a group of IP address prefixes from a specific Azure service

Summary of network-security-group:

- Network security groups are essential for controlling network traffic in Azure virtual networks.

- NSG rules are evaluated and processed based on priority and can be created for subnets and network interfaces.

- Effective NSG rules can be achieved by considering rule precedence, intra-subnet traffic, and managing rule priority.

- Application security groups provide an application-centric view of infrastructure and simplify rule management.

You can assign a network security group (#NSG) to a network interface or to a subnet. When an NSG is associated with a subnet, its access control list (ACL) rules apply to all virtual machine instances in that subnet.

The SKU of a public IP address (Basic or Standard) must match the SKU of the Azure load balancer with which the address is used.

Test exam notes

Features in Azure-Monitor:

- alert rule: when Azure Monitor data indicates an issue, an alert is triggered

- action groups: notify users about alerts and take action - collection of notification preferences

The alert state is manually set by the user and does not have any automated logic behind it. The alert state can be either New, Acknowledged, or Closed.

Characteristics of Azure-Network-Watcher:

- Azure Network Watcher is a regional service that allows you to monitor and diagnose conditions at a network scenario level in, to, and from Azure. When you create or update a virtual network in a subscription, Network Watcher will be enabled automatically in the virtual network’s region.

- IP flow verify lets you specify a source and destination IPv4 address, port, protocol (TCP or UDP), and traffic direction (inbound or outbound).

- NSG flow logs is a feature of Azure Network Watcher that allows you to log information about IP traffic flowing through an NSG.

- Packet capture allows you to create packet capture sessions to track traffic to and from a virtual machine.

Azure Monitor workbook chart visualizations: Data shown on a shared dashboard can only be displayed for a maximum of 30 days.

definition Desired-State-Configuration (#DSC): a management platform that you can use to manage an IT and development infrastructure with configuration as code.

on VPN connectivity and virtual network peering: the Point-to-Site (P2S) VPN client must be downloaded and reinstalled after virtual network peering is successfully configured, so that the new routes reach the client.

on virtual network link:

- To associate a virtual network to a private DNS zone, you add the virtual network to the zone by creating a virtual network link.

- Azure DNS Private Resolver is used to proxy DNS queries between on-premises environments and Azure DNS.

- A custom DNS server will work if you deploy a DNS server as a virtual machine or an appliance, however, this configuration does not work with a private DNS zone.

Azure Front Door is Microsoft’s advanced cloud Content Delivery Network (CDN). Azure Bastion is a service that lets you connect to a virtual machine by using a browser, without exposing RDP and SSH ports.

Azure public and private DNS: Azure Private DNS allows for private name resolution between Azure virtual networks. Azure public DNS provides DNS for public access, such as name resolution for a publicly accessible website. Azure-provided name resolution does not support user-defined domain names and only supports a single virtual network. A DNS server on a virtual machine can also be used to achieve the goal but involves much more administrative effort to implement and maintain than using Azure Private DNS.

On even traffic distribution with Azure Load Balancer: Disabling session persistence ensures even traffic distribution by removing any affinity that directs traffic to the same VM. Adjusting the load balancing rule settings might seem like a solution but does not address the root cause of uneven distribution. Enabling source IP affinity maintains session persistence, potentially exacerbating the uneven distribution of traffic. Adding more VMs does not solve the distribution issue caused by session persistence settings.
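A rough sketch of why session persistence skews distribution. It assumes a simplified model in which the load balancer hashes selected fields of each flow to pick a backend; SHA-256, the VM names, and the IP addresses are stand-ins for illustration, not the algorithm Azure uses. Without persistence all five fields are hashed, while source IP affinity hashes only the source and destination IP.

```python
import hashlib

BACKENDS = ["vm-1", "vm-2", "vm-3"]  # hypothetical backend pool


def pick_backend(fields):
    """Map a tuple of flow fields to one backend via a stable hash."""
    digest = hashlib.sha256("|".join(map(str, fields)).encode()).digest()
    return BACKENDS[int.from_bytes(digest[:8], "big") % len(BACKENDS)]


def five_tuple(src_ip, src_port, dst_ip, dst_port, protocol):
    """No session persistence: every field of the flow takes part in the hash."""
    return pick_backend((src_ip, src_port, dst_ip, dst_port, protocol))


def source_ip_affinity(src_ip, src_port, dst_ip, dst_port, protocol):
    """Source IP affinity: only source and destination IP are hashed."""
    return pick_backend((src_ip, dst_ip))


# One client opening 100 connections from different source ports
flows = [("203.0.113.10", port, "10.0.0.4", 443, "TCP") for port in range(50000, 50100)]

print(len({five_tuple(*f) for f in flows}))          # number of VMs used without persistence
print(len({source_ip_affinity(*f) for f in flows}))  # 1
```

With affinity enabled, every connection from the same client lands on one VM, so a few busy clients can overload a single backend regardless of how many VMs are in the pool.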

On route tables: Azure automatically creates a route table for each subnet on an Azure virtual network and adds system default routes to the table. You can override some of the Azure system routes with custom user-defined routes and add more custom routes to route tables. Azure routes outbound traffic from a subnet based on the routes on a subnet’s route table.

On registering VMs in a private DNS zone: To associate a virtual network to a private DNS zone, you add the virtual network to the zone by creating a virtual network link. Azure DNS Private Resolver is used to proxy DNS queries between on-premises environments and Azure DNS.

AZ-104: Manage identities and governance in Azure

Visualisation of Microsoft Azure Active Directory, now replaced by Microsoft Entra ID, and its interconnections with on-premise and cloud environments.

Coming from an on-premises perspective, it’s important to state the differences between Active Directory Domain Services (AD DS, traditionally called ‘Active Directory’) and Microsoft Entra ID.

First, some characteristics of Entra ID:

- Microsoft Entra ID is a different service, much more focused on providing identity management services to web-based apps, unlike AD DS, which is more focused on on-premises apps.

- The term tenant represents an individual Microsoft Entra instance. Within an Azure subscription, you can create multiple Microsoft Entra tenants. Entra-ID-tenants

- Each tenant has a default DNS domain name: a unique prefix, derived from the name of the Microsoft account you use to create an Azure subscription or provided explicitly when creating a Microsoft Entra tenant, followed by the onmicrosoft.com suffix.

Second, some key characteristics of Active Directory/AD DS:

On DNS and Microsoft Entra ID:

Each Microsoft Entra tenant is assigned the default Domain Name System (DNS) domain name, consisting of a unique prefix. The prefix, derived from the name of the Microsoft account you use to create an Azure subscription or provided explicitly when creating a Microsoft Entra tenant, is followed by the onmicrosoft.com suffix. Adding at least one custom domain name to the same Microsoft Entra tenant is possible and common. This name utilizes the DNS domain namespace that the corresponding company or organization owns.

On access tokens for Entra ID users: Once the user is authenticated, Microsoft Entra ID builds an access token to authorize the user and determine what resources they can access and what they can do with those resources.

Entra ID users are defined in three ways:

- cloud identities

- directory-synchronised identities (exist in an on-premises Active Directory and are synced via Microsoft Entra Connect)

- guest users

After you delete a user, the account remains in a suspended state for 30 days. During that 30-day window, the user account can be restored, along with all its properties.

definition application-class servicePrincipal-class: Separating these two sets of characteristics allows you to define an application in one tenant and use it across multiple tenants by creating a service principal object for this application in each tenant. Microsoft Entra ID creates the service principal object when you register the corresponding application in that Microsoft Entra tenant.

| AD DS | Microsoft Entra |

|---|---|

| AD DS is a true directory service, with a hierarchical X.500-based structure. | Microsoft Entra ID is primarily an identity solution, and it’s designed for internet-based applications by using HTTP (port 80) and HTTPS (port 443) communications. |

| AD DS uses Domain Name System (DNS) for locating resources such as domain controllers. | Microsoft Entra ID is a multi-tenant directory service. |

| You can query and manage AD DS by using Lightweight Directory Access Protocol (LDAP) calls. | Microsoft Entra users and groups are created in a flat structure, and there are no OUs or GPOs. |

| AD DS primarily uses the Kerberos protocol for authentication. | You can’t query Microsoft Entra ID by using LDAP; instead, Microsoft Entra ID uses the REST API over HTTP and HTTPS. |

| AD DS uses OUs and GPOs for management. | Microsoft Entra ID doesn’t use Kerberos authentication; instead, it uses HTTP and HTTPS protocols such as SAML, WS-Federation, and OpenID Connect for authentication, and uses OAuth for authorization. |

| AD DS includes computer objects, representing computers that join an Active Directory domain. | Microsoft Entra ID includes federation services, and many third-party services such as Facebook are federated with and trust Microsoft Entra ID. |

| AD DS uses trusts between domains for delegated management. | |

Entra ID for custom web-apps: you can enable Microsoft Entra authentication for the Web Apps feature of Azure App Service directly from the Authentication/Authorization blade in the Azure portal. By designating the Microsoft Entra tenant, you can ensure that only users with accounts in that directory can access the website. It’s possible to apply different authentication settings to individual deployment slots. (source)

P1:

- Self-service group management.

- Advanced security reports and alerts.

- Multi-factor authentication.

- Microsoft Identity Manager (MIM) licensing. MIM integrates with Microsoft Entra ID P1 or P2 to provide hybrid identity solutions.

- Enterprise SLA of 99.9%.

- Password reset with writeback.

- Cloud App Discovery feature of Microsoft Entra ID.

- Conditional Access based on device, group, or location.

- Microsoft Entra Connect Health.

P2:

- Microsoft-Entra-ID-Protection. This feature provides enhanced functionalities for monitoring and protecting user accounts. You can define user risk policies and sign-in policies. In addition, you can review users’ behavior and flag users for risk.

- Microsoft-Entra-Privileged-Identity-Management. This functionality lets you configure additional security levels for privileged users such as administrators. With Privileged Identity Management, you define permanent and temporary administrators. You also define a policy workflow that activates whenever someone wants to use administrative privileges to perform some task.

Microsoft-Entra-Domain-Services provides several benefits for organizations, such as:

- Administrators don’t need to manage, update, and monitor domain controllers.

- Administrators don’t need to deploy and manage Active Directory replication.

- There’s no need to have Domain Admins or Enterprise Admins groups for domains that Microsoft Entra ID manages.

If you choose to implement Microsoft Entra Domain Services, you need to be aware of the service’s current limitations. These include:

- Only the base computer Active Directory object is supported.

- It’s not possible to extend the schema for the Microsoft Entra Domain Services domain.

- The organizational unit (OU) structure is flat and nested OUs aren’t currently supported.

- There’s a built-in Group Policy Object (GPO), and it exists for computer and user accounts.

- It’s not possible to target OUs with built-in GPOs. Additionally, you can’t use Windows Management Instrumentation filters or security-group filtering.

test exam notes

To assign licenses to users based on Microsoft Entra ID attributes, you must create a dynamic security group and configure rules based on custom attributes. The dynamic group must be added to a license group for automatic synchronization. All users in the groups will get the license automatically. Microsoft Entra evaluates the users in the organization that are in scope for an assignment policy rule and creates assignments for the users who don’t have assignments to an access package; automatic assignment policies are not used for licensing.

Invite external users:

- External collaboration settings let you specify which roles in your organization can invite external users for B2B collaboration. These settings also include options for allowing or blocking specific domains and options for restricting which external guest users can see in your Microsoft Entra directory.

- Conditional Access allows you to apply rules to strengthen authentication and block access to resources from unknown locations.

- Cross-tenant access settings are used to configure collaboration with a specific Microsoft Entra organization.

- Access reviews are not used to control who can invite guest users.

Only Microsoft Entra ID P1 and P2 support SSPR, but Microsoft Entra ID P1 is the lower cost option.

When you assign licenses to a Microsoft Entra group, the licenses are consumed only by the members of the group, not by the group’s owners.

Administrative units are containers used for delegating administrative roles to manage a specific portion of Microsoft Entra. Administrative units cannot contain Azure virtual machines.

The User Administrator role allows creation and management of users and groups, managing support tickets, and monitoring service health. The Global Administrator has more permissions than required. The Billing Administrator is focused on financial aspects and the Service Administrator is a classic role with full access to Azure services, which is not required for user and group management.

AZ-104: Prerequisites for Azure administrators

Deploy Azure infrastructure by using JSON ARM templates

To add a resource to your template, you need to know the resource provider and its types of resources. The syntax for this combination is in the form of {resource-provider}/{resource-type}. For example, to add a storage account resource to your template, you need the Microsoft.Storage resource provider. One of the types for this provider is storageAccounts. So your resource type is displayed as Microsoft.Storage/storageAccounts.

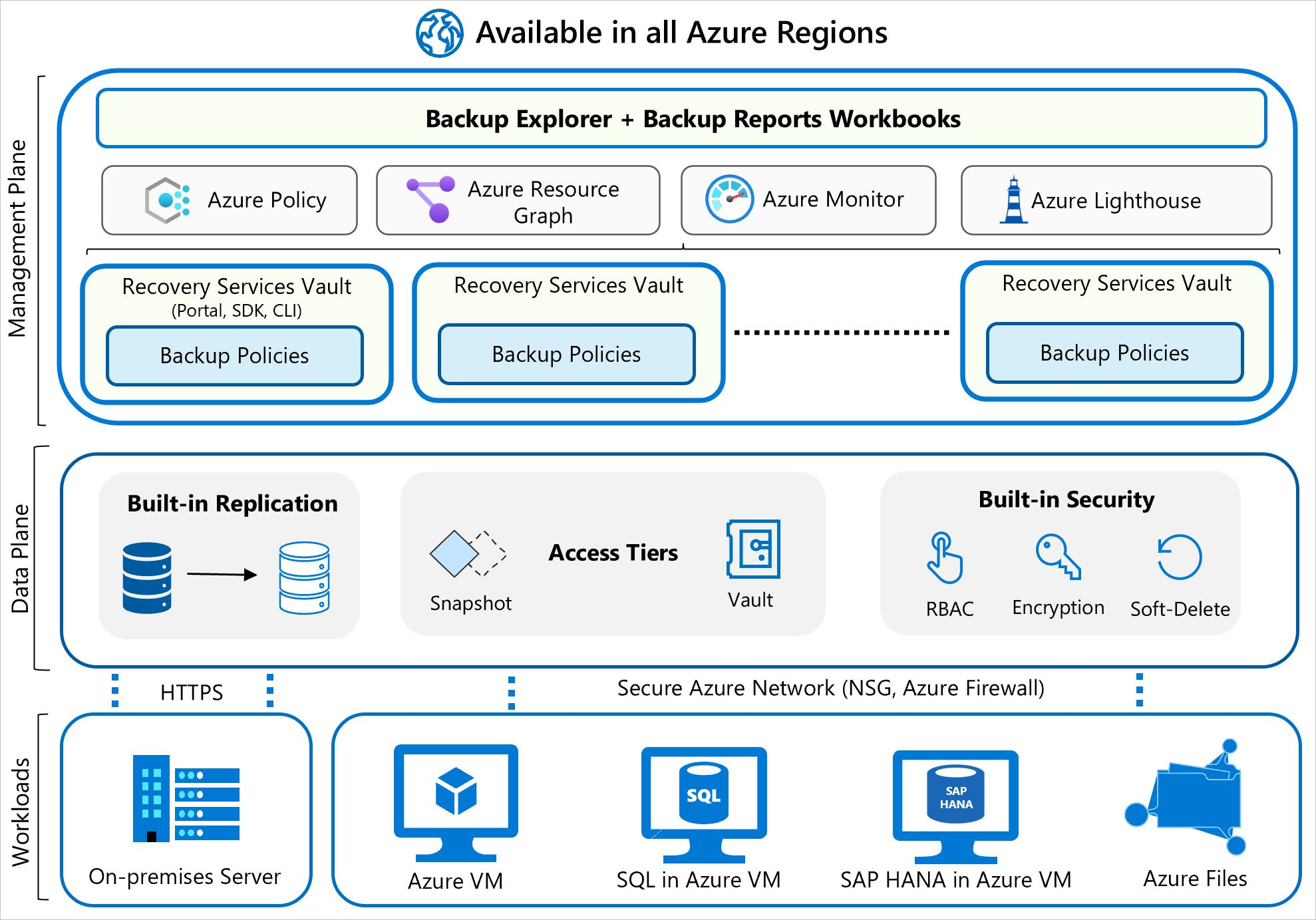

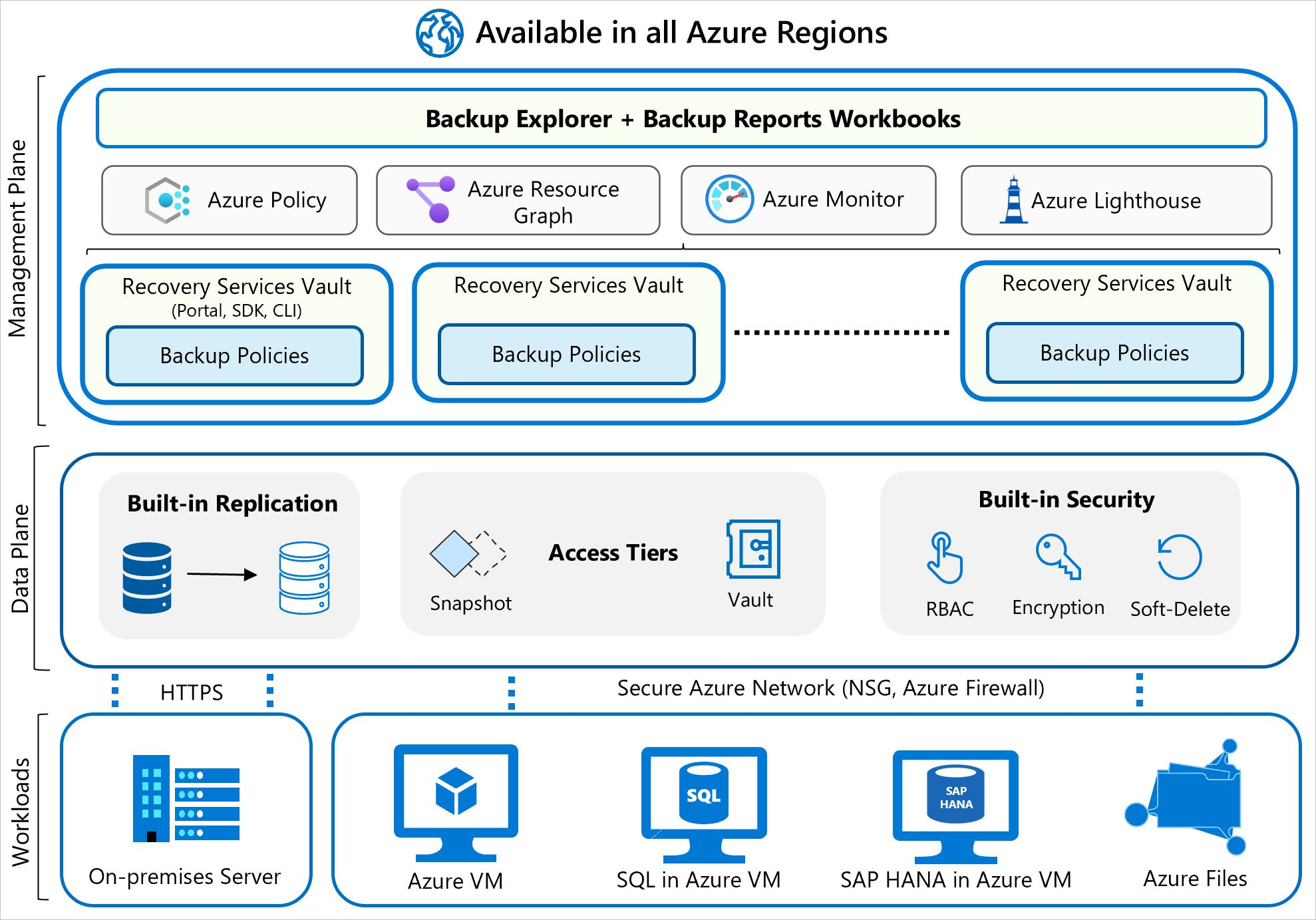
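As an illustration of the {resource-provider}/{resource-type} syntax, the sketch below builds a minimal ARM template as a Python dictionary and prints it as JSON. The storage account name, location, SKU, and the `apiVersion` value are assumptions chosen for the example; `split_resource_type` is a hypothetical helper, not part of any Azure SDK.

```python
import json

# Minimal ARM template with one storage account resource (example values)
template = {
    "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
    "contentVersion": "1.0.0.0",
    "resources": [
        {
            "type": "Microsoft.Storage/storageAccounts",
            "apiVersion": "2019-06-01",   # assumed API version
            "name": "examplestorage001",  # hypothetical, must be globally unique
            "location": "westeurope",
            "sku": {"name": "Standard_LRS"},
            "kind": "StorageV2",
        }
    ],
}


def split_resource_type(resource_type):
    """Split '{resource-provider}/{resource-type}' into its two parts."""
    provider, _, type_name = resource_type.partition("/")
    return provider, type_name


print(json.dumps(template, indent=2))
print(split_resource_type(template["resources"][0]["type"]))
# ('Microsoft.Storage', 'storageAccounts')
```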

AZ-104: Monitor and backup Azure resources

Azure Backup can provide backup services for the following data assets:

On-premises files, folders, and system state

Azure Virtual Machines (VMs)

Azure Managed Disks

Azure Files Shares

SQL Server in Azure VMs

SAP HANA (High-performance Analytic Appliance) databases in Azure VMs

Azure Database for PostgreSQL servers

Azure Blobs

Azure Database for PostgreSQL - Flexible servers

Azure Database for MySQL - Flexible servers

Azure Kubernetes cluster

The simplest explanation of Azure Backup is that it backs up data, machine state, and workloads running on on-premises machines and VM instances to the Azure cloud. Azure Backup stores the backed-up data in Recovery Services vaults and Backup vaults. For on-premises Windows machines, you can back up directly to Azure with the Azure Backup Microsoft Azure Recovery Services (MARS) agent.

If you only want to back up the files, folders, and system state on the Azure VMs, you can use the Microsoft Azure Recovery Services (MARS) agent.

- Workload integration layer

  - Backup-Extension: Integration with the actual workload, such as Azure virtual machines (VMs) or Azure Blobs, happens at this layer.

- Data Plane

  - Access Tiers: There are three access tiers where the backups could be stored: #Snapshot-tier - snapshot taken and stored along with the disk, faster than restoring from vault. #Standard-tier - backup data is stored in vault. #Archive-tier - long-term retention backup data, rarely accessed, stored for compliance needs.

  - Availability and Security: The backup data is replicated across zones or regions, based on the redundancy the user specifies.

- Management Plane

  - Recovery Services vault/Backup vault and Backup center: The vault provides an interface for the user to interact with the backup service.

test exam notes

Introduction to Azure Backup

What is Azure Backup

How do recovery time objective and recovery point objective work:

- definition Recovery-Time-Objective (#RTO): how soon, measured in time, a business process must be restored to avoid unacceptable consequences.

- definition Recovery-Point-Objective (#RPO): the maximum acceptable amount of data loss, measured in time (so how long ago the latest backup may have been taken).
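The RTO and RPO definitions can be made concrete with a small worked example. The timestamps and the four-hour targets below are made up for illustration; the two helper functions are hypothetical and only compare time spans.

```python
from datetime import datetime, timedelta


def meets_rpo(last_backup, failure, rpo):
    """RPO: data written since the last backup is lost, so that gap must fit the target."""
    return failure - last_backup <= rpo


def meets_rto(failure, restored, rto):
    """RTO: the time between failure and restored service must fit the target."""
    return restored - failure <= rto


failure = datetime(2025, 3, 1, 12, 0)
last_backup = datetime(2025, 3, 1, 6, 0)   # 6 hours of data lost
restored = datetime(2025, 3, 1, 15, 0)     # 3 hours of downtime

print(meets_rpo(last_backup, failure, rpo=timedelta(hours=4)))  # False
print(meets_rto(failure, restored, rto=timedelta(hours=4)))     # True
```

In this scenario the restore was fast enough, but the backup schedule was too sparse: meeting a four-hour RPO would need backups at least every four hours.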

How Azure Backup works

definition backup-extension: installed on a source VM or worker VM, it generates the backup, either storage-based (Azure VM, Azure Files) or stream-based (SQL, SAP HANA)

When to use Azure backup

Azure Backup provides built-in job monitoring for operations such as configuring backup, backing up, restoring, and deleting backups. This monitoring is scoped to the vault, making it ideal for monitoring a single vault.

If you need to monitor operational activities at scale, Backup-Explorer provides an aggregated view of your entire backup estate, enabling detailed drill-down analysis and troubleshooting. It’s a built-in Azure Monitor workbook that provides a single, central location to help you monitor operational activities across the entire backup estate on Azure, spanning tenants, locations, subscriptions, resource groups, and vaults.

Protect your virtual machines by using Azure Backup

Azure Backup features and scenarios

Differences Azure Backup and Azure Site Recovery:

- Azure Backup: maintains copies of stateful data that allow you to go back in time

- Site Recovery: replicates data in almost real time and allows for a failover

Azure backup benefits:

- Zero-infrastructure backup

- Long-term retention

- Security

  - Azure role-based access control (RBAC)

  - Encryption of backups

  - No internet connectivity required (happens on the Azure backbone)

  - Soft delete (data is retained for 14 additional days after deletion)

  - Enhanced soft delete (retain a deleted item in the soft delete state for a longer period)

- High availability (three types of replication)

  - Locally redundant storage (LRS: replicates data three times in a single datacenter in the primary region)

  - Geo-redundant storage (GRS: replicates data to a secondary region)

  - Zone-redundant storage (ZRS: replicates data across availability zones in the primary region)

Back up an Azure virtual machine by using Azure Backup

definition Recovery-services-vault: a storage-management entity that acts as an RBAC boundary and does not require you to manage storage accounts (these are created automatically in a separate fault domain)

Options in snapshot consistency:

- Application consistent: captures the VM as a whole (uses VSS on Windows VMs; requires pre-/post-scripts on Linux to capture application state)

- File system consistent: if VSS fails on Windows, or the pre-/post-scripts fail on Linux, a file system snapshot is still made

- Crash consistent: typically occurs if the VM shuts down at the time of backup - no I/O operations are captured - doesn’t guarantee data consistency

Azure Backup supports Selective-Disk-backup-and-restore using Enhanced-policy. This way you can selectively back up a subset of the data disks attached to your VM.

The VM backup policy supports two access tiers:

- snapshot tier: stored locally for a maximum of 5 days - fastest recovery method - AKA ‘instant restore’

- vault tier: all snapshots are transferred to the vault as well - recovery point changes to ‘snapshot and vault’

By default, backups of virtual machines are kept for 30 days.

Microsoft Azure Backup Server (MABS) is a solution designed to protect various workloads, including virtual machines (VMs), applications, and files. It allows users to manage backups from a single console, supporting both on-premises and Azure-based environments.

Object replication can be used to replicate blobs between storage accounts. Before configuring object replication, you must enable blob versioning for both storage accounts, and you must enable the change feed for the source account.

ARM

Deploy Azure infrastructure by using JSON ARM templates

Explanation of ARM-template:

ARM templates are JavaScript Object Notation (JSON) files that define the infrastructure and configuration for your deployment. The template uses a declarative syntax. The declarative syntax is a way of building the structure and elements that outline what resources look like without describing the control flow. Declarative syntax is different than imperative syntax, which uses commands for the computer to perform. Imperative scripting focuses on specifying each step in deploying the resources.

ARM templates are idempotent, which means you can deploy the same template many times and get the same resource types in the same state.
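Idempotency can be illustrated with a toy model. This is only a sketch of the idea, not how Azure Resource Manager works internally: the `deploy` function, the dictionary-based "environment", and the resource name `storage1` are all hypothetical. The template declares the desired state, and applying it a second time changes nothing.

```python
def deploy(environment, template):
    """Bring `environment` to the state described by `template`; return what changed."""
    changes = []
    for name, desired in template.items():
        if environment.get(name) != desired:
            environment[name] = dict(desired)  # create or update the resource
            changes.append(name)
    return changes


# Declarative description of the desired end state (example values)
template = {"storage1": {"type": "Microsoft.Storage/storageAccounts", "sku": "Standard_GRS"}}
environment = {}

first = deploy(environment, template)
second = deploy(environment, template)
print(first, second)  # ['storage1'] []
```

An imperative script that always runs a "create" step would instead fail or duplicate work on the second run, which is the contrast the declarative syntax avoids.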

ARM-template parameters let you customize the deployment by providing values that are tailored for a particular environment.

output-values: “You use outputs when you need to return values from the deployed resources.” (source)

#idempotent: “Recall that ARM templates are idempotent, which means you can deploy the template to the same environment again, and if nothing changes in the template, nothing changes in the environment.” (source)

Commands

| command | type | result |

|---|---|---|

| New-AzVM | Azure PowerShell | creates a VM inside your subscription |

| az login | Azure CLI | signs in to Azure |

| az vm create | Azure CLI | creates a VM |

| New-AzResourceGroupDeployment | Azure PowerShell | Adds an Azure deployment to a resource group. |

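Example of PowerShell command with added flags:

```PowerShell
# Build a unique deployment name from today's date
$today = Get-Date -Format "MM-dd-yyyy"
$deploymentName = "addSkuParameter-" + "$today"

# $templateFile must hold the path to the ARM template.
# Replace {your-unique-name} with your own storage account name.
# Assumes a default resource group was set earlier (for example with Set-AzDefault).
New-AzResourceGroupDeployment `
  -Name $deploymentName `
  -TemplateFile $templateFile `
  -storageName {your-unique-name} `
  -storageSKU Standard_GRS
```

Notes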

Azure management options:

- Azure portal

- Azure PowerShell and Azure Command Line Interface (CLI)

- Azure Cloud Shell (web-based CLI)

- Azure mobile app

Azure Cloud Shell

Select the Cloud Shell icon (>_) to create a new Azure Cloud Shell session.

ARM

Azure Policy with enforcement mode set to Default evaluates resource creation requests but does not automatically append tags during template deployments unless the template itself provides the required tag field. By explicitly including the tag in the ARM template, you guarantee that the deployment passes policy evaluation and the resource remains compliant.

TEMP

You must use the RemediationDescription field in the metadata section from properties to specify a custom recommendation. The remaining options are Azure policies, but do not allow specific custom remediation information.